The Race to Reproduce AlphaFold3

Since the release of AlphaFold 3 in May, several groups have been racing to be the first to fully reproduce and open-source the model.

Update: The AlphaFold 3 code has since been released by DeepMind and is available here. There is also the fully open-source Boltz-1 model, available under the MIT license.

In May of this year, DeepMind and Isomorphic released AlphaFold 3, their latest model capable of accurately modelling all biomolecules of life. At the time, I wrote a blog post commenting on the model while tactfully avoiding the significant issue at hand—the lack of open-source code and trained model weights. Needless to say, there was a…bit…of…controversy over this.

In response, several groups have been racing to be the first to fully reproduce and, ideally, open-source the model in a widely accessible format, which would unlock significant benefits for the BioML and life sciences research communities and industry.

For months, the field remained relatively quiet—until a model release by a Chinese tech company set off a chain reaction of further releases, a sequence that continues to unfold (pun intended) as I write this. This article aims to track this evolving story and outline who has released what so far.

As with AlphaFold 2 before it, I believe that open-sourcing these models will greatly accelerate progress in both biological and ML research, paving the way for a wealth of new research and applications.

#1 HelixFold3 effort

The starting pistol in this race was fired by Baidu, a large Chinese technology company, with the release of their AF3 clone, HelixFold3. For those unfamiliar with the field, Baidu might seem like an unexpected first mover. However, they have a subsidiary (or at least a closely related entity) called PaddleHelix, which offers various BioML models as a service, and they collaborate with several partners, primarily Chinese biotech companies.

HelixFold3 was accompanied by a brief 6-page technical report on arXiv, with only 3 pages of actual text. The report provides no detailed description of the methods used, merely noting that they were “informed by insights from the AlphaFold 3 paper,” and offers little discussion of the results. Nonetheless, the model’s performance in protein-ligand docking appears to be just below that of AlphaFold3, and the predicted confidence metrics—crucial for scientists using AlphaFold2—seem to be well-calibrated to true error.
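
One rough way to check the calibration claim, once independent predictions are available, is to bin predictions by reported confidence and compare the average observed error in each bin. The sketch below is only an illustration: the function name, the bin edges, and all numbers are made-up assumptions, not HelixFold3 outputs or values from the report.

```python
from bisect import bisect_right
from statistics import mean


def mean_error_per_bin(confidences, errors, bin_edges):
    """Average the observed error of predictions falling in each confidence bin.

    Assumes every confidence lies between the first and last bin edge.
    """
    n_bins = len(bin_edges) - 1
    binned = [[] for _ in range(n_bins)]
    for conf, err in zip(confidences, errors):
        # Locate the bin; clamp so a value on the top edge lands in the last bin.
        idx = min(bisect_right(bin_edges, conf) - 1, n_bins - 1)
        binned[idx].append(err)
    return [mean(b) if b else None for b in binned]


# Made-up toy data: a pLDDT-like score (0-100) and an observed error in angstroms.
toy_confidences = [35, 45, 55, 65, 75, 85, 92, 97]
toy_errors = [9.0, 7.0, 5.0, 4.0, 2.5, 1.5, 1.0, 0.5]
toy_edges = [0, 50, 70, 90, 100]

per_bin = mean_error_per_bin(toy_confidences, toy_errors, toy_edges)
for low, high, err in zip(toy_edges, toy_edges[1:], per_bin):
    print(f"confidence {low:>3}-{high:<3}: mean error {err} A")
```

If the confidence score is well calibrated, the mean error should fall steadily as the confidence bin rises, as it does in this toy data by construction.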

It’s important to note, of course, that none of this has been independently verified yet, and the fact that their GitHub repo currently has 54, 57, 59 (and climbing) issues tells me it will be weeks, not days, until we see quality independent benchmarking done.

Additionally, it’s worth mentioning that HelixFold3 does not yet support post-translational modifications, and the paper does not include experiments on antibodies. This omission is likely due to computational costs, as AlphaFold 3’s performance on antibodies only reached its peak when sampling from the model 1,000 times.
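
To see why heavy sampling is expensive, here is a minimal best-of-N sketch, assuming each sample is an independent stochastic run and that samples are ranked by a model-reported confidence score. `sample_prediction` is a hypothetical stand-in that returns a random score; it is not the AlphaFold 3 or HelixFold3 API.

```python
import random
from dataclasses import dataclass


@dataclass
class Prediction:
    seed: int
    confidence: float  # stand-in for a model-reported ranking score


def sample_prediction(seed: int) -> Prediction:
    """Hypothetical stand-in for one stochastic model run (one seed)."""
    rng = random.Random(seed)
    return Prediction(seed=seed, confidence=rng.random())


def best_of_n(n: int) -> Prediction:
    """Run the model n times and keep the highest-confidence sample."""
    samples = [sample_prediction(seed) for seed in range(n)]
    return max(samples, key=lambda p: p.confidence)


best_10 = best_of_n(10)
best_1000 = best_of_n(1000)
print(best_10.confidence, best_1000.confidence)
```

The cost grows linearly with the number of samples, so a 1,000-sample evaluation needs roughly 1,000 times the inference compute of a single prediction per target.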

#2 Ligo Biosciences effort

Links

🖥️ Code

Ligo Biosciences, an AI-driven enzyme engineering startup founded by Oxford undergraduates currently participating in Y Combinator's summer cohort, has released a new implementation under the permissive Apache 2.0 License, available for both non-commercial and commercial use.

The project is still in its early phases, and while it is not yet production-ready, the team has successfully implemented a version of AlphaFold3 capable of single-chain protein predictions (although sampling code is not yet provided). It’s important to note that there is no support for ligand, multimer, or nucleic acid predictions, and there is no formal benchmarking or paper for the model (yet). It is also unclear what the maximum token length is.

While there are no proper benchmarks yet (and the example video does contain a pretty obvious chain break), it is interesting that they can produce samples after training on “only” 8 A100 GPUs for 10 hours. So there is some hope for the rest of us!

The commit history indicates that most of the development has been carried out by a single individual (kudos to Arda!).

The team also discovered some discrepancies in the AlphaFold 3 algorithms as described in the supplementary information of the paper—such as a missing residual connection and the order of modules in the MSA stack, which results in the last layer not contributing to structure prediction. These could be intentional engineering choices or minor typos in the paper. They’ve documented these technical differences in the README.

The company also used this release as an opportunity to announce a partnership with Basecamp Research, another TechBio startup that holds vast amounts of private sequence data sourced from exotic environments worldwide.

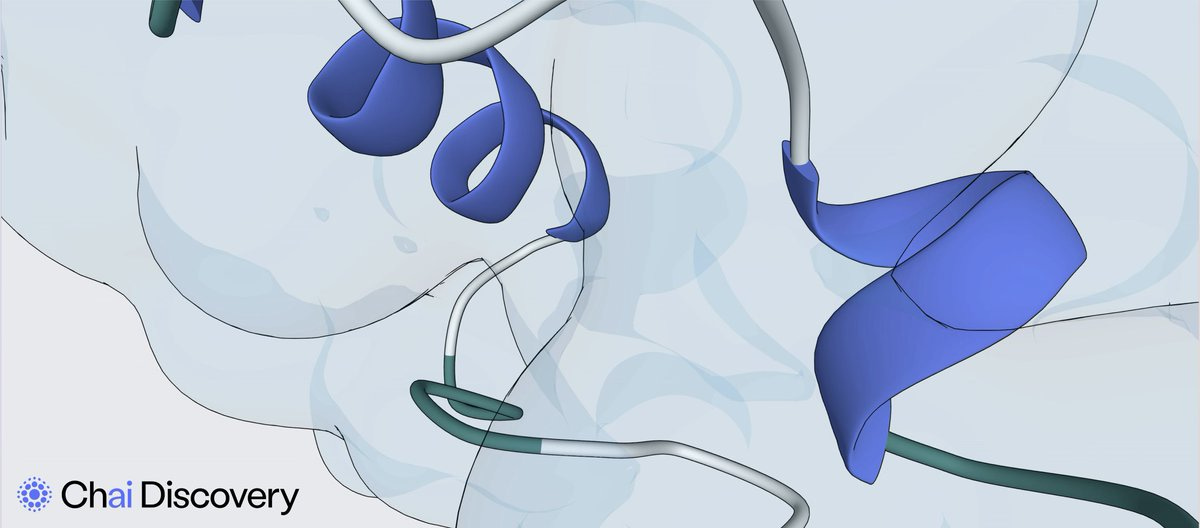

#3 Chai Discovery’s Chai-1

Links

🖥️ Code

Last but not least is Chai Discovery, a startup that had been operating in stealth mode until recently. Backed by OpenAI, Thrive, and Dimension Capital, they have just unveiled their model, Chai-1. This release is particularly exciting and came just a day before I started writing this article. Alongside the model, they also published a technical report, which offers some insights into their methodology and includes a thoughtful discussion of the results.

Chai Discovery has made their model available on GitHub for local use, though the source code itself isn’t provided; only the exported models are accessible. In my experience, it’s remarkably easy to use [1], largely because the model leverages the protein language model ESM to compute protein embeddings, bypassing the need to construct an entire multiple sequence alignment (MSA), which is typically the most time-consuming part of running AlphaFold2.

Understandably, the code is for non-commercial use only and comes with a special license [3] similar to that of the AF3 web server. However, they provide a web interface that seems completely open for any use, including commercial applications such as drug discovery. I don’t expect anyone will actually feed in any proprietary structures; I suppose they want industry folks to have a go and then reach out about partnerships!

Performance looks strong on many tasks, with protein-ligand docking on the PoseBusters set in line with AF3. However, there is a noticeable lack of comparison to AF3 for nucleic acids and antibodies. [EDIT: In fairness to them, the exact evaluation set used in the AF3 paper is not explicitly given (unlike PoseBusters), and Chai Discovery cannot benchmark AF3 on their own test set due to license limitations.] We do have an accurate estimate of the compute cost, however: they used 128 A100 GPUs for 30 days (~75% of AF3 costs), so training such models is still firmly out of reach for most of us.
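
For a sense of scale, the figures quoted in this article can be turned into GPU-hours with some quick arithmetic. This is a back-of-the-envelope sketch: the variable names are mine, the AF3 number is only what the "~75%" figure implies, and the Ligo run mentioned earlier was an early single-chain model, so it is not a like-for-like comparison.

```python
def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total GPU-hours for a run using num_gpus devices for the given wall-clock hours."""
    return num_gpus * hours


# Figures quoted in this article.
chai1_gpu_hours = gpu_hours(128, 30 * 24)  # 128 A100s for 30 days
ligo_gpu_hours = gpu_hours(8, 10)          # 8 A100s for 10 hours

# If Chai-1 used ~75% of AF3's compute, the implied AF3 budget is:
implied_af3_gpu_hours = chai1_gpu_hours / 0.75

print(f"Chai-1:        {chai1_gpu_hours:,.0f} A100-hours")
print(f"Ligo (early):  {ligo_gpu_hours:,.0f} A100-hours")
print(f"AF3 (implied): {implied_af3_gpu_hours:,.0f} A100-hours")
print(f"Chai-1 / Ligo: {chai1_gpu_hours / ligo_gpu_hours:,.0f}x")
```

That works out to about 92,000 A100-hours for Chai-1, roughly 1,150 times the Ligo run.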

They also have a really cool ability to constrain predictions based on (potentially proprietary) experimental data, giving holders of such data a potential advantage on difficult targets. They explain this nicely in the paper:

“We also add new training features, designed to mimic experimental constraints. These include pocket, contact, and docking constraints, which capture varying granularity of interactions between entities in a complex. […] During inference, these constraints can be specified using prior knowledge or information gained from experiments such as hydrogen-deuterium exchange, mass spectrometry or cross-linking mass spectrometry.”
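
To make the idea of a contact constraint concrete, here is a toy sketch of how one could be represented and checked against a predicted structure. Everything in it is an assumption for illustration: the `ContactConstraint` class, the distance-threshold semantics, and the coordinates are hypothetical and are not Chai-1's input format. In Chai-1 the constraints are fed to the model as features, which this post-hoc check does not reproduce.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ContactConstraint:
    """Hypothetical restraint: two residues should lie within max_distance angstroms."""
    chain_a: str
    residue_a: int
    chain_b: str
    residue_b: int
    max_distance: float


def is_satisfied(constraint: ContactConstraint, coords: dict) -> bool:
    """Check a constraint against coordinates keyed by (chain, residue index)."""
    a = coords[(constraint.chain_a, constraint.residue_a)]
    b = coords[(constraint.chain_b, constraint.residue_b)]
    return math.dist(a, b) <= constraint.max_distance


# Made-up coordinates for two residues, 5 angstroms apart.
toy_coords = {("A", 10): (0.0, 0.0, 0.0), ("B", 5): (3.0, 4.0, 0.0)}

loose = ContactConstraint("A", 10, "B", 5, max_distance=8.0)
tight = ContactConstraint("A", 10, "B", 5, max_distance=4.0)
print(is_satisfied(loose, toy_coords), is_satisfied(tight, toy_coords))
```

A cross-linking experiment, for example, could plausibly be translated into a list of such residue pairs with a distance cutoff set by the linker length.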

Closing Thoughts

With competition heating up, other teams are likely eager to release their own replications soon, each vying to establish its version as the new standard. We have yet to hear from the Baker Lab or the OpenFold Consortium [4], although I am sure they are working on something.

As of now, DeepMind has not provided any updates on their plans to release the model code and weights “within 6 months” of the paper’s release, which would mean by mid-November at the latest. It remains to be seen whether they will accelerate their timeline if another implementation begins to gain traction as the preferred model in the research community.

[1] Reminder that this does not equal true single-sequence structure prediction, as the language model has effectively memorised the MSA. It is basically parameterised vs. non-parameterised storage of evolutionary information.

[3] This has the usual stipulations about non-commercial use, but also explicitly bans using Chai-1 outputs to train any “neural network […] with more than 10,000 trainable parameters” or improve any “technology for protein structure prediction or protein, drug, or enzyme design”.